Small-scale unmanned aerial vehicles (UAVs) are increasingly used in civil and commercial applications such as monitoring, surveillance, and disaster response.

Equipped with cameras and other sensors, these autonomously flying robots can quickly sense the environment from a bird's eye view or transport goods from one place to another.

Such systems support, for example, first responders in disasters such as floods, mudslides, forest fires, and earthquakes, helping them quickly assess the situation and coordinate emergency forces.

Check out uav.aau.at for more information on multi-UAV research at Klagenfurt University.

For some applications, it is beneficial to employ a team of coordinated UAVs rather than a single UAV. Multiple UAVs can cover a given area faster or take photos from different perspectives at the same time. This emerging technology is still at an early stage and, consequently, requires substantial research and development effort.

As part of the research cluster Lakeside Labs, a team of seven researchers and four professors in Klagenfurt has been working on multi-UAV systems since 2008.

The team has developed a system that provides functionality similar to Google Earth and Microsoft Virtual Earth but captures small areas at much higher resolution: a user first outlines the area of interest on a map, and the UAVs then fly over the specified area, take images, and provide an accurate, up-to-date overview picture of the environment.
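To make the "fly over the area and take images" step concrete, here is a minimal sketch of one common way to lay out photo positions over an outlined area: a back-and-forth (boustrophedon, or "lawnmower") pattern. It assumes a rectangular area in a local metric frame (conversion to GPS coordinates is omitted), flat ground, a downward-facing camera with the same field of view in both axes, and a chosen overlap between neighboring images. The function names and parameters are hypothetical; this is not the team's documented planner.

```python
import math
from typing import List, Tuple


def footprint_width(altitude_m: float, fov_deg: float) -> float:
    """Ground width covered by one downward-facing image, assuming flat ground."""
    if altitude_m <= 0 or not 0 < fov_deg < 180:
        raise ValueError("altitude must be positive and FOV in (0, 180) degrees")
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


def lawnmower_waypoints(width_m: float, height_m: float, altitude_m: float,
                        fov_deg: float, overlap: float = 0.5) -> List[Tuple[float, float]]:
    """Photo positions (x, y) in meters covering a width_m x height_m rectangle
    in a back-and-forth pattern with the given fractional image overlap."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    # Spacing between neighboring photo centers so that images overlap by `overlap`.
    step = footprint_width(altitude_m, fov_deg) * (1.0 - overlap)
    cols = max(1, math.ceil(width_m / step))   # photos per strip
    rows = max(1, math.ceil(height_m / step))  # number of strips
    points: List[Tuple[float, float]] = []
    for r in range(rows):
        y = min((r + 0.5) * step, height_m)
        xs = [min((c + 0.5) * step, width_m) for c in range(cols)]
        if r % 2 == 1:
            xs.reverse()  # fly every other strip in the opposite direction
        points.extend((x, y) for x in xs)
    return points
```

For example, `lawnmower_waypoints(200, 100, 100, 60)` plans a 200 m x 100 m area at 100 m altitude with a 60-degree field of view and 50 % overlap, which yields two strips of four photos each. Lower altitudes shrink the footprint and quickly increase the number of photos, which is one reason splitting the work across several UAVs pays off.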

"Our solution requires mission planning and coordination for multiple aerial vehicles," explains senior researcher Markus Quaritsch. The system computes the flight routes for the individual UAVs, taking into account the maximum flight time imposed by battery constraints. A flight route consists of a sequence of waypoints specified in GPS coordinates, the flight altitude, and a set of actions for every waypoint, such as taking a photo or setting the orientation. The UAVs then fly autonomously according to the computed plan without any need for human interaction.
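The route structure described above (GPS waypoints, altitude, per-waypoint actions) and the battery constraint can be sketched as follows. This is a minimal illustration, not the team's planner: it assumes a single take-off/landing point, constant cruise speed, a fixed duration per action, great-circle (haversine) distances that ignore altitude changes, and a simple greedy rule that cuts an ordered waypoint list into consecutive routes, one per UAV, each fitting the flight-time budget. All class and function names are hypothetical.

```python
import math
from dataclasses import dataclass, field
from typing import List

EARTH_RADIUS_M = 6_371_000.0


@dataclass
class Waypoint:
    lat: float                # degrees
    lon: float                # degrees
    alt_m: float              # flight altitude in meters
    actions: List[str] = field(default_factory=list)  # e.g. ["set_orientation", "take_photo"]


@dataclass
class Route:
    uav_id: int
    waypoints: List[Waypoint]


def haversine_m(a: Waypoint, b: Waypoint) -> float:
    """Great-circle distance between two waypoints (altitude ignored)."""
    p1, p2 = math.radians(a.lat), math.radians(b.lat)
    dp = p2 - p1
    dl = math.radians(b.lon - a.lon)
    h = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def route_time_s(home: Waypoint, wps: List[Waypoint],
                 speed_mps: float, action_s: float) -> float:
    """Estimated time for home -> wps -> home plus a fixed time per action."""
    path = [home] + wps + [home]
    distance = sum(haversine_m(p, q) for p, q in zip(path, path[1:]))
    return distance / speed_mps + action_s * sum(len(w.actions) for w in wps)


def split_routes(home: Waypoint, wps: List[Waypoint], max_time_s: float,
                 speed_mps: float = 5.0, action_s: float = 2.0) -> List[Route]:
    """Greedily cut an ordered waypoint list into per-UAV routes within the time budget."""
    routes: List[Route] = []
    current: List[Waypoint] = []
    for wp in wps:
        # Close the current route if adding this waypoint would exceed the battery budget.
        if current and route_time_s(home, current + [wp], speed_mps, action_s) > max_time_s:
            routes.append(Route(uav_id=len(routes), waypoints=current))
            current = []
        if route_time_s(home, current + [wp], speed_mps, action_s) > max_time_s:
            raise ValueError("waypoint cannot be reached within the flight-time budget")
        current.append(wp)
    if current:
        routes.append(Route(uav_id=len(routes), waypoints=current))
    return routes
```

The greedy cut keeps the waypoint order (for example, the order of a coverage pattern) and only decides where one UAV's route ends and the next begins; a real planner would likely also balance route lengths across a fixed number of UAVs.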

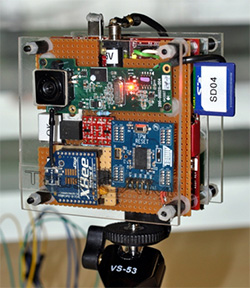

The UAVs are equipped with different sensors (e.g., a thermal camera and a conventional photo camera) to build a multi-layered overview image. The pictures are pre-processed on board the UAV and sent to the ground station during flight. At the ground station, the individual pictures are mosaicked into a large overview image. Because the UAVs fly at low altitude (below 150 meters), the pictures show significant perspective distortions. Under the guidance of Bernhard Rinner, the team has developed and patented an incremental approach for computing the overview picture that provides quick feedback to the user and is both geometrically correct and visually appealing.
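The idea of building the overview incrementally can be illustrated with a generic textbook technique, which is not the patented method: each new image is related to the previous one by a 3x3 homography (a projective transform that can model perspective distortion), and its transform into the mosaic frame is obtained by chaining that pairwise homography onto the previous image's transform. Earlier images never need to be reprocessed. The sketch assumes all images share the same size, that the first image defines the mosaic frame, and that pairwise homographies come from some registration step not shown here; it also omits drift correction, pixel warping, and blending. The class and method names are hypothetical.

```python
from typing import List, Optional, Tuple

Matrix = List[List[float]]
IDENTITY: Matrix = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


def matmul3(a: Matrix, b: Matrix) -> Matrix:
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def apply_h(hom: Matrix, x: float, y: float) -> Tuple[float, float]:
    """Map pixel (x, y) through a homography using homogeneous coordinates."""
    u = hom[0][0] * x + hom[0][1] * y + hom[0][2]
    v = hom[1][0] * x + hom[1][1] * y + hom[1][2]
    w = hom[2][0] * x + hom[2][1] * y + hom[2][2]
    return u / w, v / w


class IncrementalMosaic:
    """Keeps one image-to-mosaic homography per image and the mosaic's bounding box."""

    def __init__(self, width: int, height: int):
        self.size = (width, height)
        self.transforms: List[Matrix] = []
        self.bbox: Optional[Tuple[float, float, float, float]] = None  # min_x, min_y, max_x, max_y

    def add_image(self, h_prev_from_new: Optional[Matrix] = None) -> Matrix:
        """Register a new image given the homography mapping it into the previous image."""
        if not self.transforms:
            g = [row[:] for row in IDENTITY]  # first image defines the mosaic frame
        elif h_prev_from_new is None:
            raise ValueError("a pairwise homography is required after the first image")
        else:
            # new -> previous -> mosaic
            g = matmul3(self.transforms[-1], h_prev_from_new)
        self.transforms.append(g)
        # Grow the mosaic extent by the new image's projected corners only.
        w, h = self.size
        corners = [apply_h(g, x, y) for x, y in ((0, 0), (w, 0), (w, h), (0, h))]
        xs = [c[0] for c in corners]
        ys = [c[1] for c in corners]
        box = (min(xs), min(ys), max(xs), max(ys))
        if self.bbox is None:
            self.bbox = box
        else:
            self.bbox = (min(self.bbox[0], box[0]), min(self.bbox[1], box[1]),
                         max(self.bbox[2], box[2]), max(self.bbox[3], box[3]))
        return g
```

Because every update touches only the newest image, a user can see the overview grow as pictures arrive. The drawback of pure chaining is that small registration errors accumulate along the sequence, so a method that aims to be geometrically correct has to counter this drift in some way.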

Real-world applicability of the system is a major objective. The system has been demonstrated several times in coordinating firefighters during training drills.

"We recently participated in a large-scale forest fire exercise in the Austrian-Slovenian border region," Quaritsch says. "Other demonstrations included the monitoring of a large construction site near Vienna and the observation of an industrial accident." The lessons learned from such applied research will further the work of a recently founded spin-off company.

Key Publications

- Daniel Wischounig-Strucl and Bernhard Rinner. Resource Aware and Incremental Mosaics of Wide Areas from Small-Scale UAVs. Machine Vision and Applications, pages 20, 2015.

- Asif Khan, Evsen Yanmaz and Bernhard Rinner. Information Exchange and Decision Making in Micro Aerial Vehicle Networks for Cooperative Search. IEEE Transactions on Control of Networked Systems, pages 12, 2015.

- Saeed Yahyanejad and Bernhard Rinner. A fast and mobile system for registration of low-altitude visual and thermal aerial images using multiple small-scale UAVs. ISPRS Journal of Photogrammetry and Remote Sensing, pages 14, September 2014.

- Markus Quaritsch, Karin Kruggl, Daniel Wischounig-Strucl, Subhabrata Bhattacharya, Mubarak Shah and Bernhard Rinner. Networked UAVs as Aerial Sensor Network for Disaster Management Applications. e&i Elektrotechnik und Informationstechnik, 127(3), pages 56-63, Springer, 2010.

Research Team

- Bernhard Rinner (PI)

- Asif Khan (PhD student)

- Markus Quaritsch (2008-2012)

- Omair Sarwar (PhD student)

- Jürgen Scherer (PhD student)

- Daniel Wischounig-Strucl (2009-2013)

- Saeed Yahyanejad (2010-2015)

Projects

The objective of the CLIC (Closed-Loop Integration of Cognition, Communication and Control) project is to integrate real-time image analysis, adaptive motion control, and synchronous communication between the imaging and control subsystems. This integration is demonstrated by optimizing the trajectory control of a crane that is additionally equipped with distributed smart cameras observing its environment.

The objective of the CLIC (Closed-Loop Integration of Cognition, Communication and Control) project is to integrate real-time image analysis, adaptive motion control, and synchronous communication between the imaging and control subsystems. This integration is demonstrated on optimizing the trajectory control of a crane additionally equipped with distributed smart cameras observing the crane's environment.